The increasing volume and complexity of cyberattacks require organizations of all sizes to secure their network architecture proactively. Threat modeling is essential to the shift from reactive to proactive security. The process involves identifying security objectives, discovering vulnerabilities, and developing mitigations for the threats that may affect a particular system or network.

Artificial intelligence can be a useful cybersecurity tool, particularly for detecting patterns and providing rapid insights using technologies like machine learning, deep learning and natural language processing. These capabilities make it especially valuable for under-resourced cyber professionals.

How can AI be valuable for threat modeling?

AI can help automate the otherwise manual process of mapping components (the individual elements represented in a threat model) to potential threats and to the countermeasures that mitigate them. This helps cybersecurity professionals identify their attack surface more accurately. AI can also rank potential threats, allowing security architects to prioritize mitigation.
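The mapping and ranking idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Threat` class, the `KNOWLEDGE_BASE` table, the 1 to 5 scoring scales and the sample entries are hypothetical, and the static lookup table stands in for what a trained model would predict.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: int  # 1 (rare) to 5 (frequent); illustrative scale
    impact: int      # 1 (minor) to 5 (severe); illustrative scale
    countermeasure: str

    @property
    def risk(self) -> int:
        # Simple likelihood x impact score used for ranking.
        return self.likelihood * self.impact


# Hypothetical knowledge base: component type -> known threats.
# In an AI-assisted tool this mapping would come from a trained model.
KNOWLEDGE_BASE = {
    "web_server": [
        Threat("SQL injection", 4, 5, "Use parameterized queries"),
        Threat("Cross-site scripting", 4, 3, "Encode output"),
    ],
    "database": [
        Threat("Unencrypted data at rest", 2, 5, "Enable storage encryption"),
    ],
}


def rank_threats(components):
    """Return (component, threat) pairs sorted from highest to lowest risk."""
    pairs = [(c, t) for c in components for t in KNOWLEDGE_BASE.get(c, [])]
    return sorted(pairs, key=lambda pair: pair[1].risk, reverse=True)


ranked = rank_threats(["web_server", "database"])
for component, threat in ranked:
    print(f"{threat.risk:>2}  {component}: {threat.name} -> {threat.countermeasure}")
```

Components that are missing from the knowledge base simply contribute no threats, which is one reason a human reviewer still needs to check the model for coverage gaps.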

What kind of data can be used to train AI models for better detection of threats and countermeasure techniques?

The data used to train AI models may include the following:

- Attack technique dataset - This dataset may include information about past attacks, techniques and known vulnerabilities.

- Network log dataset - This dataset may include system events, as well as details of normal and abnormal network traffic.

- Vulnerability dataset - This dataset may include information about system and software vulnerabilities, including their severity and risk factors, as published by reputable sources such as MITRE and OWASP.

- Threat intelligence dataset - This dataset may include recent information about known threats, vulnerabilities, tactics and techniques.
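As a rough sketch of how two of these datasets might be combined into labeled training rows, the Python example below joins vulnerability records with threat intelligence records. The field names, the `VULN-` identifiers and the `build_training_rows` helper are all hypothetical placeholders, not a real feed format.

```python
# Hypothetical vulnerability records (placeholder IDs, not real CVEs).
vulnerabilities = [
    {"id": "VULN-0001", "severity": 9.8, "component": "web_server"},
    {"id": "VULN-0002", "severity": 5.3, "component": "database"},
]

# Hypothetical threat intelligence records keyed by vulnerability ID.
threat_intel = [
    {"vuln_id": "VULN-0001", "exploited_in_wild": True},
]


def build_training_rows(vulns, intel):
    """Label each vulnerability 1 if intel reports active exploitation, else 0."""
    exploited = {r["vuln_id"] for r in intel if r["exploited_in_wild"]}
    return [
        {
            "id": v["id"],
            "severity": v["severity"],
            "component": v["component"],
            "label": int(v["id"] in exploited),
        }
        for v in vulns
    ]


rows = build_training_rows(vulnerabilities, threat_intel)
for row in rows:
    print(row)
```

A vulnerability with no matching intelligence record is labeled 0 here, which is a simplifying assumption; in practice "no report" and "not exploited" are not the same thing.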

Benefits and Limitations of Using AI

Some benefits of using AI for threat modeling include:

- Automation and efficiency - AI can automate certain aspects of threat modeling, such as mapping threats to security requirements, based on the vulnerability and threat intelligence datasets provided to the model. It can also provide analysis and risk assessment, which can save time and resources.

- Continuous real-time monitoring - AI-powered systems can be trained to monitor activity continuously, detecting potential threats and alerting security teams in real time. This enables rapid response, narrowing the window attackers have to exploit a weakness and giving security professionals more time to apply countermeasures.

- Adaptive defense - Providing the AI models with up-to-date data can help them learn from past attacks and provide defense strategies. This adaptability strengthens the overall security posture of the organization.

- Scalability - AI models can scale to handle large and complex datasets, which allows organizations to monitor their networks and environments continuously.

- Enhanced threat detection and threat intelligence - With sufficient GPU and CPU processing power, AI algorithms can process many kinds of datasets efficiently. This can help identify patterns, anomalies, and indicators of compromise that traditional rule-based systems might miss. It can also provide insights into emerging threats, vulnerabilities, and attacker techniques, which can help teams make well-informed security decisions and prioritize their efforts.
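To make the monitoring and anomaly detection benefits more concrete, here is a minimal Python sketch that flags unusual request volumes in log data. It uses a simple z-score against a baseline as a stand-in for a trained model; the `find_anomalies` function, the threshold of 3.0 and the sample numbers are assumptions for illustration only.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed values that deviate strongly from the baseline.

    A value is flagged when its z-score against the baseline exceeds threshold.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > threshold]


# Hypothetical requests-per-minute counts taken from network logs.
baseline = [100, 104, 98, 101, 97, 103, 99, 102]
observed = [101, 99, 250, 100]

anomalous = find_anomalies(baseline, observed)
print(anomalous)
```

With this sample data only the spike of 250 requests is flagged. A fixed statistical threshold like this is far simpler than a learned model, but it shows the same pattern: establish what normal looks like, then alert on deviations.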

However, using AI does have limitations:

- Data quality - AI algorithms can be biased when the training data is not properly cleaned, or when its quality, quantity, and diversity are insufficient.

- Human expertise - AI should be seen as a complementary tool rather than a replacement for human expertise. Security professionals with domain knowledge and experience still play a crucial role.

- Adversarial attacks - AI will not be aware of zero-day vulnerabilities, because its knowledge depends on the data used to train the models. If that data is not up to date, the results will be biased and the recommended security measures may be inaccurate. This could reduce the model’s performance and effectiveness in threat modeling.

Since the accuracy of AI-generated results depends on the data the AI is fed, false negatives and false positives are possible. Therefore, while AI can help identify vulnerabilities, suggest countermeasures, and automate tasks, human expertise is crucial for verification, validation, and in-depth analysis of the results before any important security decisions are made.
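One way to put numbers on false positives and false negatives is to compare a model's alerts with analyst-verified outcomes. The Python sketch below does this for a small made-up sample; the `confusion_counts` helper and the data are hypothetical.

```python
def confusion_counts(predicted, actual):
    """Count true/false positives and negatives for boolean alert data."""
    pairs = list(zip(predicted, actual))
    return {
        "tp": sum(p and a for p, a in pairs),          # real threats flagged
        "fp": sum(p and not a for p, a in pairs),      # false alarms
        "fn": sum(not p and a for p, a in pairs),      # missed threats
        "tn": sum(not p and not a for p, a in pairs),  # correctly ignored
    }


# Hypothetical data: did the model raise an alert, and was it a real threat?
predicted = [True, True, False, False, True, False]
actual = [True, False, True, False, True, False]

counts = confusion_counts(predicted, actual)
precision = counts["tp"] / (counts["tp"] + counts["fp"])  # share of alerts that were real
recall = counts["tp"] / (counts["tp"] + counts["fn"])     # share of real threats caught

print(counts)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision means analysts waste time on false alarms, while low recall means real threats slip through, so both figures are worth tracking when human reviewers validate a model's output.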

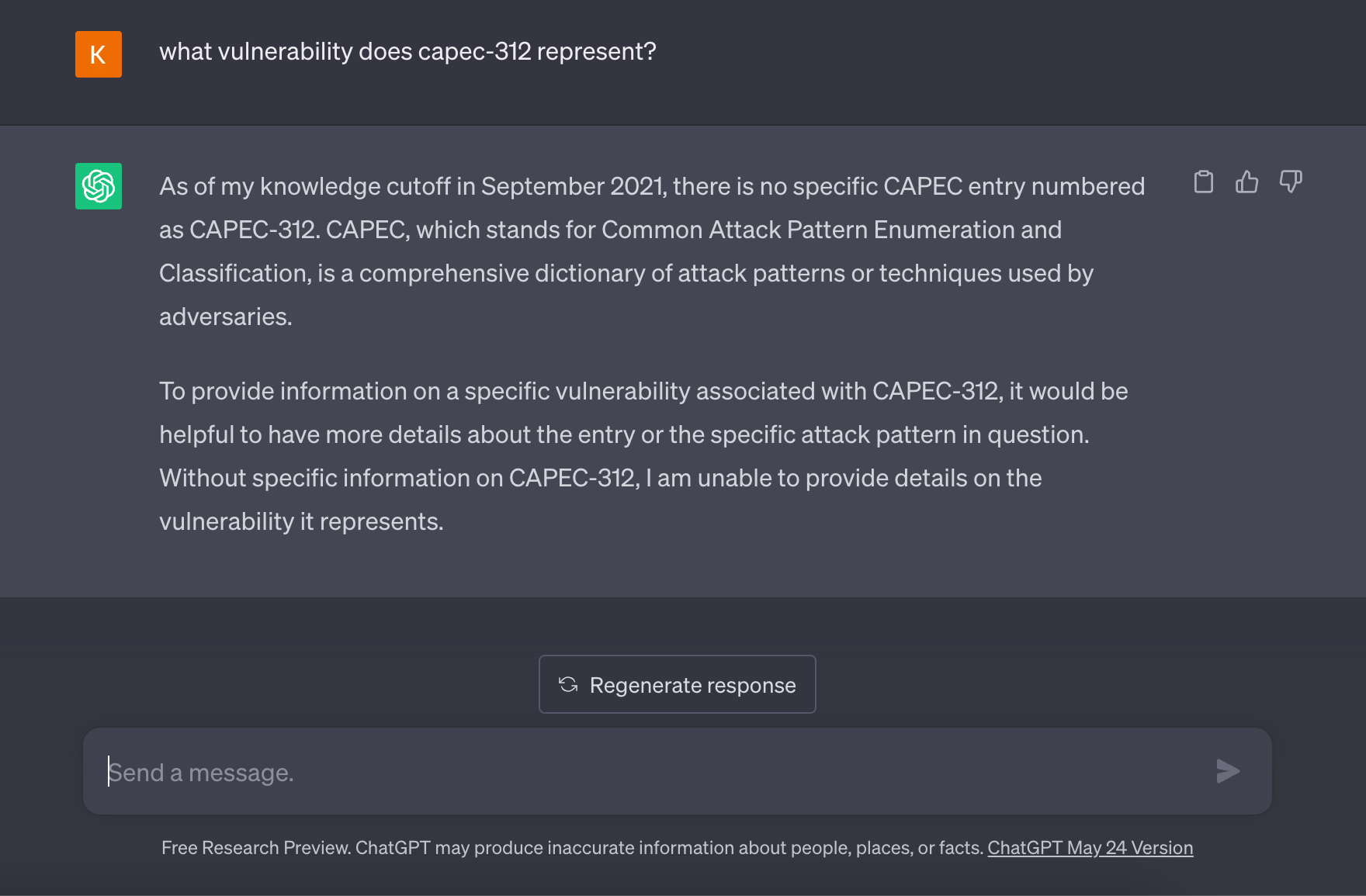

Here is an example of ChatGPT provided by OpenAI. I asked ChatGPT - “Which vulnerability does CAPEC-312 represent?” Its answer is in the image below.

This means that ChatGPT does not contain up-to-date information.

In summary, AI can augment human capabilities, automate tasks, and provide valuable insights that can significantly improve threat modeling efforts. However, AI should not be seen as a substitute for human expertise; it should be used alongside that expertise and good cybersecurity practices to get the best possible results.